Over the past month, we’ve seen AI coding tools begin to take on multi-step development tasks end to end. GitHub Copilot can now draft features, suggest fixes, run tests, and iterate on code within the developer workflow. Anthropic’s Claude Code has demonstrated similar multi-step execution—planning changes, writing and revising code, and validating results—while humans supervise or even sleep. And OpenAI described its new GPT-5.3-Codex as “instrumental in creating itself.”

Meanwhile, most enterprises are still using models as question-answering tools. That gap—between what models are now capable of doing and how they’re actually being used at work—is growing fast.

The next phase of AI is a shift from standalone models toward agentic systems of work. In 2026, AI will increasingly plan, act, verify, revise, and deliver. Until now, the biggest challenge around bringing AI to work has been simply getting people to use it. Going forward, it becomes an operating question: how to structure, coordinate, and govern work when tasks and entire end-to-end processes are executed autonomously by agents. Model quality will still matter, but what will matter most is ensuring those models exist within a system that’s designed to deliver high-quality outcomes consistently, securely, and at scale.

We’re already bringing these capabilities into Microsoft Copilot—expanding it from task assistance toward coordinated execution within the tools people use every day.

Planners and workers get the job done

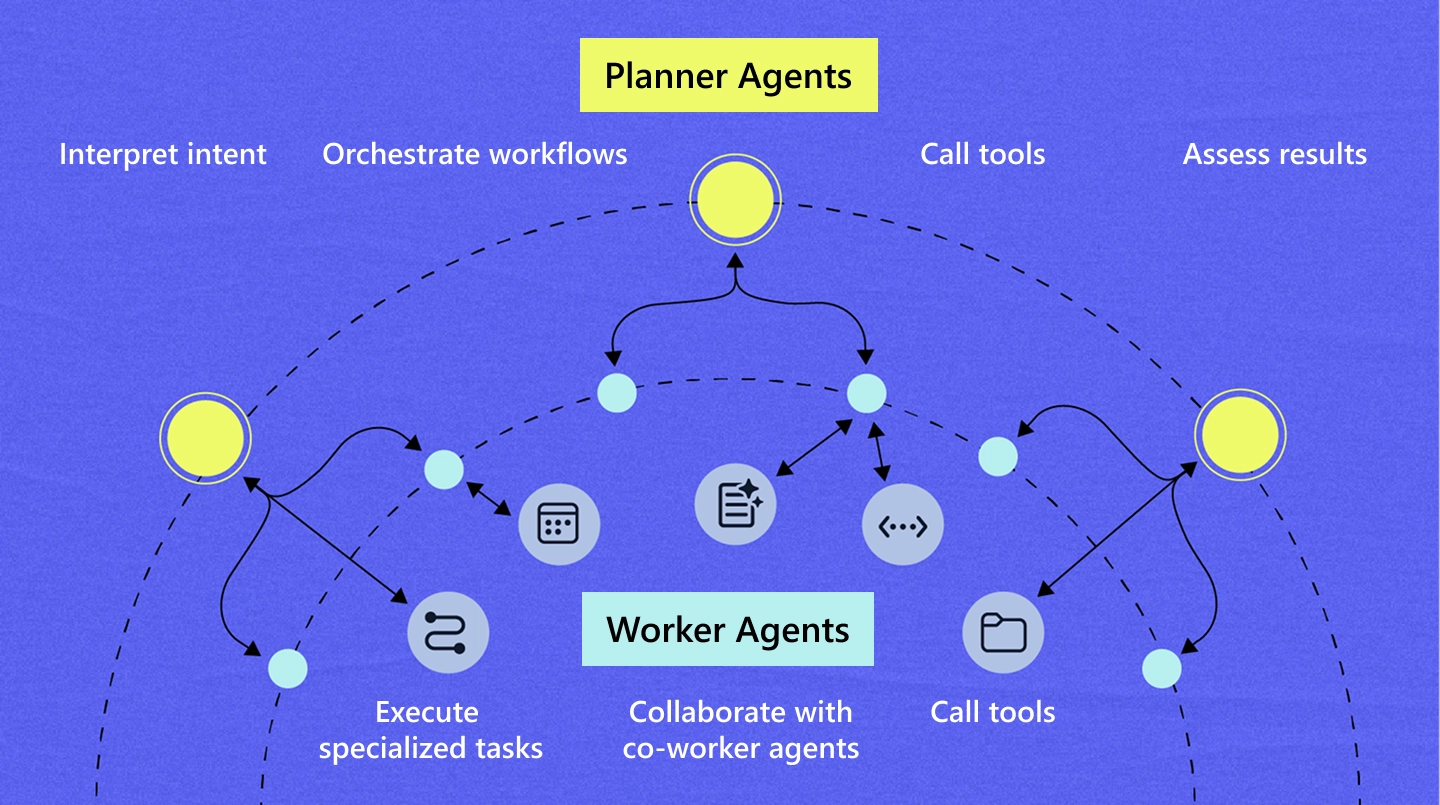

An agentic system of work is an array of agents, supported by tools, set up to collaborate and complete tasks. At a basic level, these systems have two roles: planners and workers. An outer loop of planner agents takes a goal, breaks it into steps, and assigns those steps to worker agents or deterministic tools—like a database or calculator—to complete a task. An inner loop of worker agents executes those steps—writing code, analyzing data, taking actions—using tools where precision and repeatability matter. Planner agents then check progress and decide what happens next.
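To make the two roles concrete, here is a minimal sketch of the planner and worker loops in Python. It is an illustration under stated assumptions, not how Copilot or any other product is implemented: the names `Step`, `plan`, `writer`, `calculator`, `HANDLERS`, and `run` are all hypothetical, and the planner and worker are plain functions standing in for model calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    handler: str  # name of the worker agent or tool that should run this step
    payload: str  # input passed to that handler


def plan(goal: str) -> List[Step]:
    """Hypothetical planner: a real one would ask a model to decompose the goal."""
    return [
        Step("calculator", "2 + 3"),  # precise, repeatable work goes to a tool
        Step("writer", goal),         # open-ended work goes to a worker agent
    ]


def calculator(expr: str) -> str:
    """Deterministic tool: adds two integers written as 'a + b'."""
    left, right = expr.split("+")
    return str(int(left) + int(right))


def writer(task: str) -> str:
    """Stand-in for a worker agent; a real one would call a model."""
    return f"draft for: {task}"


# Assumed registry mapping handler names to callables.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "writer": writer,
}


def run(goal: str) -> List[str]:
    """Outer loop: take each planned step, dispatch it, and check the result."""
    results: List[str] = []
    for step in plan(goal):
        output = HANDLERS[step.handler](step.payload)  # inner loop: execute
        if not output:
            # Planner-side check: stop rather than build on an empty result.
            raise RuntimeError(f"step for {step.handler} produced no output")
        results.append(output)
    return results
```

Calling `run("summarize the Q3 report")` sends the arithmetic to the deterministic tool and the drafting to the worker, then returns both outputs in order. In a real system the check inside `run` would be richer, and the planner could revise the remaining steps based on what came back.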

This structure is relatively simple, but it’s crucial because it keeps AI systems oriented over time: they can plan, execute, check their work, and recover when something goes wrong. You can see elements of this in GitHub Copilot today. A developer can describe a change, have Copilot generate the implementation, run tests, respond to failures, and iterate, all within the development environment. These tools are still human-guided, but they show how multi-step execution is becoming embedded directly into workflows. Claude Code has demonstrated similar multi-step execution outside the IDE, making clear where this is headed.

Truly agentic systems of work will enable entire functions to move from task-by-task assistance to end-to-end execution—from marketing and finance to operations and customer support.

From assistants to agentic systems of work

Planner and worker agents coordinate to move from goal to outcome—executing end-to-end tasks without humans orchestrating every step.

From expertise to execution

The real unlock from an agentic system of work is the ability to move from goal to outcome without humans coordinating every step. That’s what levels up AI from assistant to operational layer.

This shift makes clear the problem most organizations are running into. AI isn’t stalled because the models can’t do more. It’s stalled because work is still designed around humans calling all the shots. As a result, leaders are seeing a lot of activity (drafts, pilots, experiments) but very little compounding progress.

Until workflows change, progress will stay incremental, no matter how capable the technology becomes.

In practice, a system of work behaves less like a single tool and more like a well-run team: A goal enters the system. A coordinating layer translates it into steps, routes each step to the appropriate agent, evaluates outputs against defined criteria, and determines what needs to happen next. If something fails, the system retries, escalates, or solicits human input. When something works, the system captures what it learned—successful approaches, useful signals, resolved edge cases—and uses that context to inform the next run.
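The loop described above can be sketched in a few lines of Python. This is a simplified illustration, assuming each agent is a plain function and each step's "defined criteria" is a yes/no check; `Agent`, `Check`, `attempt_step`, `coordinate`, `flaky_drafter`, `broken_publisher`, and `non_empty` are hypothetical names, not part of any product or library.

```python
from typing import Callable, Dict, List, Optional, Tuple

Agent = Callable[[int], str]  # assumed: receives the attempt number, returns output
Check = Callable[[str], bool]  # assumed: the acceptance criteria for one step


def attempt_step(agent: Agent, accept: Check, max_attempts: int) -> Tuple[Optional[str], int]:
    """Retry a step until its output passes the check or attempts run out."""
    for attempt in range(1, max_attempts + 1):
        output = agent(attempt)
        if accept(output):
            return output, attempt
    return None, max_attempts


def coordinate(
    steps: List[Tuple[str, Agent, Check]],
    memory: Dict[str, str],
    max_attempts: int = 2,
) -> List[str]:
    """Route each step to its agent, evaluate the output, and decide what is next."""
    log: List[str] = []
    for name, agent, accept in steps:
        output, attempts = attempt_step(agent, accept, max_attempts)
        if output is None:
            # Retries exhausted: hand off to a person and stop the run.
            log.append(f"{name}: escalated to human after {attempts} attempts")
            break
        memory[name] = output  # capture what worked to inform the next run
        log.append(f"{name}: passed on attempt {attempts}")
    return log


def flaky_drafter(attempt: int) -> str:
    """Example agent that fails once, then succeeds."""
    return "" if attempt == 1 else "campaign brief"


def broken_publisher(attempt: int) -> str:
    """Example agent that never produces usable output."""
    return ""


def non_empty(output: str) -> bool:
    """Example acceptance check: any non-empty output passes."""
    return bool(output)
```

Running `coordinate([("draft", flaky_drafter, non_empty), ("publish", broken_publisher, non_empty)], memory)` with an empty `memory` dictionary retries the draft once, stores the result, and then escalates the publish step instead of looping forever. The design choice worth noting is that retry limits and escalation live in the coordinating layer rather than in each agent, so the same policy applies to every step.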

This is the direction Copilot is evolving toward: from isolated task assistance to coordinated execution across workflows.

What it all means for leaders

We’re moving from models that know to systems that execute—a fundamentally different category of change. It’s a rewiring of how work gets done.

Most leaders don’t start with a clear map of their workflows. That’s normal. Work has accreted over years across teams, tools, and handoffs that no one fully owns. The practical starting point isn’t to redesign everything, but to start with one recurring outcome and trace how it actually gets done. Whether it’s shipping a campaign, closing a ticket, or releasing a feature, ask questions:

Where does the work get delayed?

Where do humans step in just to move things along?

Where does progress stall because workflow coordination lives in people’s heads?

Agentic systems of work matter because they make those weak points visible for redesign. They give leaders a way to move from siloed AI experiments to operational mechanisms that can carry work forward. That’s how this shift becomes actionable: one workflow at a time.
